Prompt debugger

The internet is buzzing with excitement over AI, prompts, and instructions. So, I decided to jump on the train and explore it myself—testing different approaches, experimenting with various setups, and learning along the way. I'm currently working on a prompt file designed to help both developers and QAs fix their Playwright tests—not with generic solutions, but with project-specific fixes tailored to your own codebase and setup. More on that soon...

In the meantime, during my research, something felt missing. I couldn't find a clear way to see what the large language model (LLM) was thinking: how it decided what to do, what tools it chose, or why it took a particular path. I wanted something like a step-by-step breakdown. Think of it like walking through code with a debugger, inspecting each variable and decision point. That’s when it hit me: what if I tried to build that experience myself?

As with anything I build, I test it thoroughly. Not just to make sure it works, but to see how well it works. (Side note: I highly recommend this to every prompt engineer, prompt crafter, or whatever title you give yourself. Please, test your prompts. Don’t just assume your prompt works after you've used it twice.)

If any of the following questions resonate with you, then you’re in the right place:

- Wanted a prompt that can debug your other prompts?

- Had the model use a tool you didn't intend, and wished there was a way to inspect what it actually did under the hood?

- Wondered about the reasoning process the LLM followed to reach its conclusion, step by step?

- Questioned whether the LLM truly read and processed the full file you provided?

- Needed an agent to execute tasks step by step, with you deciding when it should move to the next action?

Well, this is what you need:

# Prompt debugger

- Perform all actions step by step and wait for user confirmation before each step

- You will try to be as transparent as possible about each of your actions

- If you use any built-in tools or MCP tools, always write in chat the exact name of the tool you used and the reason, using a maximum of 100 characters.

- You MUST write each reasoning step you perform in chat.

- If you search a certain area of the #codebase you MUST write in chat what you are looking for and where you are looking, using a maximum of 100 characters.

- If you read any particular file you MUST write in chat the total lines of code you have read from that file

- For each action or use of a tool, always prompt for user confirmation before performing the action or using the tool

- After each step, ask the user whether they want to continue or need further information about the current task's reasoning and context

You can use this as either a prompt file or an instruction file. Below I explain how to add it as an instruction file, and why I prefer that over a prompt file.

Keep in mind that I am talking about GitHub Copilot in VS Code here.

Open VS Code, press Ctrl+Shift+P, search for "instructions", and select Chat: New Instructions File.

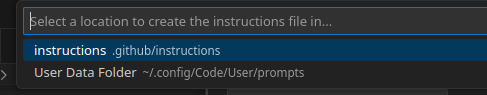

You will be prompted to choose where the instruction file should be created.

If you choose .github/instructions, this will create a new instruction file scoped to the current workspace or repository. It will only apply to this specific project.

If you choose the User Data Folder, this sets a global instruction file, which will be used across all workspaces and repositories. The file path may vary depending on your OS—since I’m on Linux, I see a different path than you might on Windows or macOS.

As the last step, it will ask you for the name of the instruction file. Once you give it a name, you will have a Markdown file called whateverNameYouSet.instructions.md. Paste the instructions from above into this file, and you are good to go.

Instruction files should have an `applyTo` header at the top, which tells Copilot which files the instructions apply to. You can set `applyTo: '**'`, which means the instructions apply to all files you interact with, all the time. But that’s not always what I want. So here are two better alternatives:

- Instead of setting up a permanent instruction file, create a prompt file with the same content and drag it into context only when you need it.

- Use conditional instructions triggered by keywords

This is where it gets interesting.

What if, during your flow or “vibe sessions,” you want to turn on a debugger to see what’s happening behind the scenes—then turn it off just as easily, without:

- switching between modes,

- looking for prompt files,

- breaking your focus/context?

With keyword trigger-based conditional instructions, you can activate or deactivate a behavior just by typing a specific word. Imagine you type:

- `debug-on` to turn it on

- `debug-off` to turn it off

Simple enough, isn't it? Here is the complete instruction file:

---

applyTo: '**'

---

# Prompt debugger mode

This is a special mode for debugging the prompt and the instructions applied to it.

The following instructions take effect ONLY if the user says these words, EXACTLY as written and case sensitive:

```

debug-on

```

After the user says `debug-on`, EXACTLY as written and case sensitive, the following instructions will be applied:

- You will enter a special mode called `debug-prompt-mode`

- Perform all actions step by step and wait for user confirmation before each step

- You will try to be as transparent as possible about each of your actions

- If you use any built-in tools or MCP tools, always write in chat the exact name of the tool you used and the reason, using a maximum of 100 characters.

- You MUST write each reasoning step you perform in chat.

- If you search a certain area of the #codebase you MUST write in chat what you are looking for and where you are looking, using a maximum of 100 characters.

- If you read any particular file you MUST write in chat the total lines of code you have read from that file

- For each action or use of a tool, always prompt for user confirmation before performing the action or using the tool

- After each step, ask the user whether they want to continue or need further information about the current task's reasoning and context

- Each of your responses will have a header saying "Debugging prompt mode" and the current step number

To switch back to normal mode, the user must say these words, EXACTLY as written and case sensitive:

```

debug-off

```

From the moment the user says `debug-off`, EXACTLY as written and case sensitive, none of the instructions above from `Prompt debugger mode` apply anymore.
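To make the intended toggle behaviour concrete, here is a minimal sketch of it as a plain Python state machine. It is only a model of what the instruction file asks for, not how Copilot works internally: an LLM follows instructions on a best-effort basis, so real behaviour can drift. The `DebugToggle` class, its `handle` method, and the returned strings are hypothetical names made up for illustration. The sketch also assumes the keyword has to be the whole message (ignoring surrounding whitespace), which the instruction file does not spell out.

```python
from dataclasses import dataclass

# Keywords taken from the instruction file above.
DEBUG_ON = "debug-on"
DEBUG_OFF = "debug-off"


@dataclass
class DebugToggle:
    """Hypothetical model of the on/off behaviour described in the instructions."""

    active: bool = False
    step: int = 0

    def handle(self, message: str) -> str:
        # Assumption: the keyword must be the entire message.
        # The comparison is case sensitive, so "Debug-On" does not match.
        text = message.strip()
        if text == DEBUG_ON:
            self.active = True
            self.step = 0
            return "debug-prompt-mode enabled"
        if text == DEBUG_OFF:
            self.active = False
            return "debug-prompt-mode disabled"
        if self.active:
            # In debug mode every response carries a header with the step number.
            self.step += 1
            return f"Debugging prompt mode - step {self.step}"
        return "normal response"


# Usage: feed a short, made-up conversation through the toggle.
toggle = DebugToggle()
for message in ["hello", "Debug-On", "debug-on", "fix my test", "debug-off", "continue"]:
    print(f"{message!r:>14} -> {toggle.handle(message)}")
```

A model like this also doubles as a checklist when testing the instruction file by hand: try the wrong casing, try the keyword, do some work, switch it off, and compare what the chat does with what the sketch predicts.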

Happy vibing...
