Publish your Playwright reports to GitHub Pages

What I am looking to achieve here is a free solution that publishes my reports into separate sub-directories, each accessible at a unique link that I can easily put in a Jira ticket, a GitHub issue or another test management tool, so I can show my client, manager or team the status of our test runs, for regressions or other circumstances. A place where I can easily see the screenshots and even open the Playwright trace files without ever downloading anything. Yes, you have read that right: I am not downloading any trace.zip artifact, the trace viewer works directly on the published page.

On top of all of that, because I have a lot of tests, I want to use the sharding functionality of Playwright for a full parallelisation experience, and merge all blob reports into one. And because storage is always expensive and there are limits, I want to keep records only for the last N days.

So, how do I publish my Playwright reports into separate subdirectories on GitHub Pages, using blob reports?

I have created this GitHub Actions workflow.

My workflow will do the following:

- On each push/PR, the workflow Playwright-Tests will run

- Tests will run in full parallel using sharding

- Each machine will create a report, and it will be uploaded to artifacts

- A second job will download all artifacts, use playwright merge-reports and create one single html report

- An action will run that will push each report into github pages

- Each report will have its own subdirectory to avoid overwriting reports (eg.: username.github.io/name_of_repo/20231129_000203Z)

- A script will run periodically or manually triggered that will erase reports older than a set amount of time

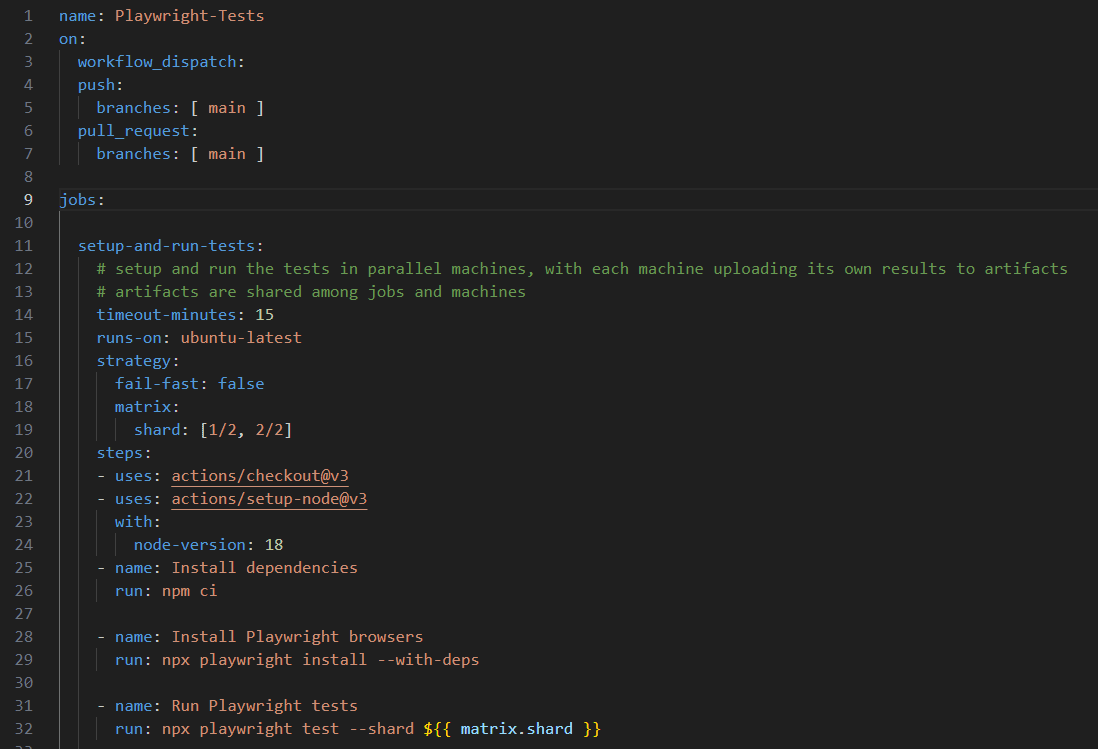

Here is how the yaml file starts:

The first part, until line 9, is the standard setup for a workflow to be triggered upon push or pull request.

The first job, named setup-and-run-tests, will use a matrix for sharding. Do the matrix as you please. If you want 4 shards, use [1/4, 2/4, 3/4, 4/4]. You know best how many tests you have and how many shards you need. By the way, one of my favorite authors that I follow to learn new tricks with Playwright is Butch Mayhew, and he wrote an article on how to find the optimal parallelisation settings for your machines. Do check it out.
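As a small illustration of the matrix values, here is a Python sketch that generates the `index/total` labels for any shard count. It is not part of the workflow, and `shard_matrix` is a hypothetical helper name used only for this example.

```python
from typing import List


def shard_matrix(total: int) -> List[str]:
    """Return Playwright-style shard labels such as '1/4', '2/4', and so on."""
    if total < 1:
        raise ValueError("total must be at least 1")
    return [f"{index}/{total}" for index in range(1, total + 1)]


if __name__ == "__main__":
    # Print a list that can be pasted into the `shard:` entry of the matrix.
    print("[" + ", ".join(shard_matrix(4)) + "]")
```

Each label is what ends up after `--shard` on the command line of one machine.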

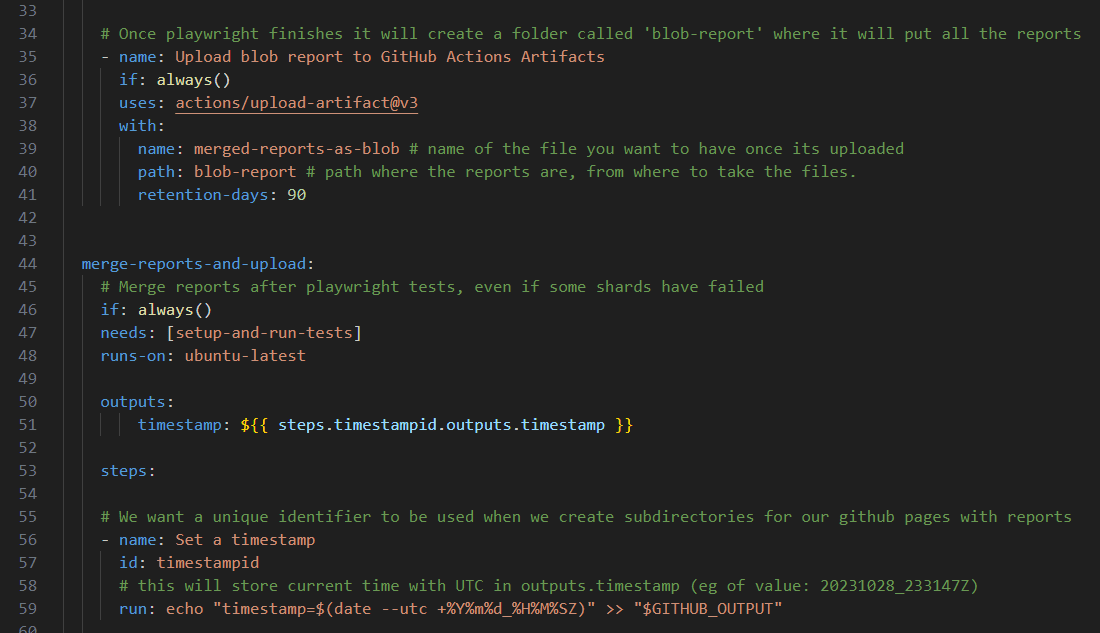

The first job will end at line 41, and it will upload the blob reports from all the machines into artifacts.

Our second job, merge-reports-and-upload, will start by setting up a timestamp key. Why do we need this? Three reasons:

- We need a unique ID for each report, and the best way to get one is to use a timestamp.

- We later have to differentiate these reports by date, because we are going to do cleanup and we want to keep history only for the last N days.

- We are going to use this timestamp to name the folder where we keep our report, which will then be published at a subdirectory with the same name. That gives you a perfect link that you can share in your Jira tickets or GitHub issues, or even show to your client. An example of the final URL looks like this:

https://username.github.io/name-of-repo/20231129_000203Z/
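To make the timestamp idea concrete, here is a minimal Python sketch of the same naming scheme. The workflow itself produces the value in shell with `date --utc +%Y%m%d_%H%M%SZ`; the function names below are hypothetical and only illustrate that a folder name can be generated from a date and parsed back into one, which is what the later cleanup relies on.

```python
from datetime import datetime, timezone
from typing import Optional

# Same pattern as `date --utc +%Y%m%d_%H%M%SZ`; the trailing Z is a literal character.
TIMESTAMP_FORMAT = "%Y%m%d_%H%M%SZ"


def make_report_folder_name(now: Optional[datetime] = None) -> str:
    """Return a folder name such as '20231129_000203Z' (pass a timezone-aware datetime)."""
    now = now or datetime.now(timezone.utc)
    return now.astimezone(timezone.utc).strftime(TIMESTAMP_FORMAT)


def parse_report_folder_name(name: str) -> datetime:
    """Turn a folder name back into a UTC datetime; raises ValueError for other names."""
    return datetime.strptime(name, TIMESTAMP_FORMAT).replace(tzinfo=timezone.utc)
```

Because the fields go from year down to second, sorting the folder names alphabetically also sorts them chronologically.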

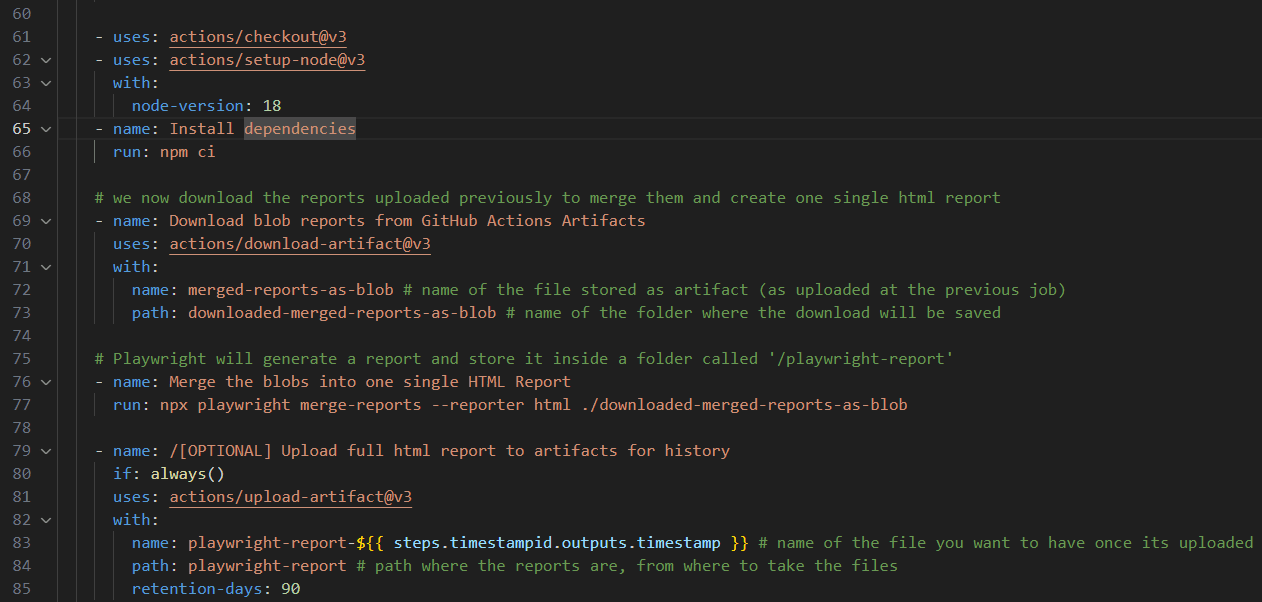

We then install dependencies, because we need Playwright to merge our reports after the job downloads all of the blob reports from the previous job.

playwright merge-reports will create a folder ./playwright-report containing the final single report.

We will [optionally] upload the final report to artifacts.

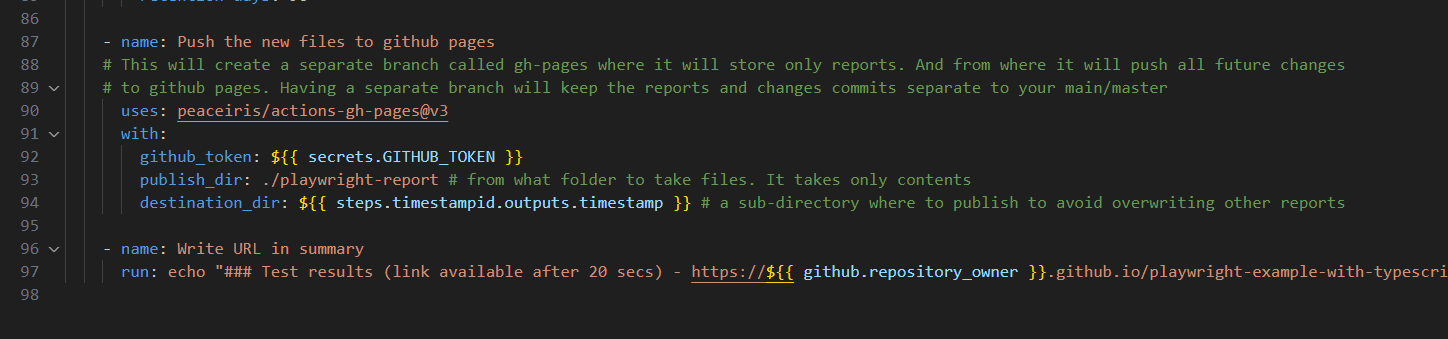

We are going to use the peaceiris/actions-gh-pages action to publish our report into a separate subfolder, set with the destination_dir parameter.

And in the end, for a nice UX, we are going to publish in the summary part of GitHub Actions the final URL where the report can be seen on GitHub Pages.

You have to configure a few things in your GitHub repo settings for everything to work.
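The URL for the summary can be built from values that GitHub Actions already exposes. Below is a hedged Python sketch of that idea. `GITHUB_REPOSITORY` and `GITHUB_STEP_SUMMARY` are standard Actions environment variables, while `REPORT_FOLDER` is a hypothetical variable that would carry the timestamp, and the function names are made up for this example. It assumes a project site without a custom domain.

```python
import os


def report_url(repository: str, folder: str) -> str:
    """Build the Pages URL of a report from 'owner/repo' and the report folder name."""
    owner, repo = repository.split("/", 1)
    return f"https://{owner}.github.io/{repo}/{folder}/"


def append_to_summary(url: str, summary_path: str) -> None:
    """Append a Markdown line with the report link to the job summary file."""
    with open(summary_path, "a", encoding="utf-8") as handle:
        handle.write(f"### Test results - {url}\n")


if __name__ == "__main__":
    # The fallback values are placeholders so the sketch also runs outside of Actions.
    repository = os.environ.get("GITHUB_REPOSITORY", "username/name-of-repo")
    folder = os.environ.get("REPORT_FOLDER", "20231129_000203Z")
    url = report_url(repository, folder)
    summary_path = os.environ.get("GITHUB_STEP_SUMMARY")
    if summary_path:
        append_to_summary(url, summary_path)
    else:
        print(url)
```

Deriving the repository name this way avoids hardcoding it in the workflow, at the cost of one extra script.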

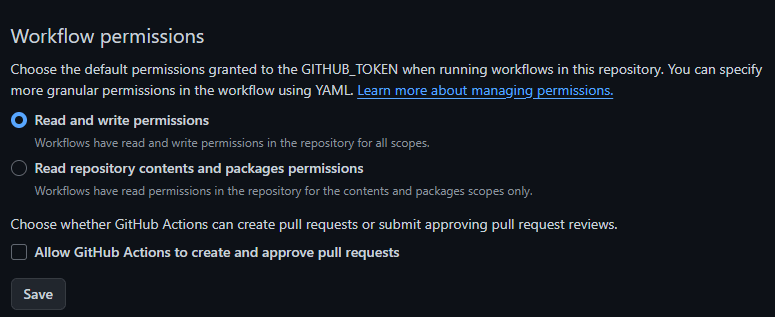

First go to your repo's Settings - Actions - General and, under Workflow permissions, choose Read and write permissions for the GitHub token.

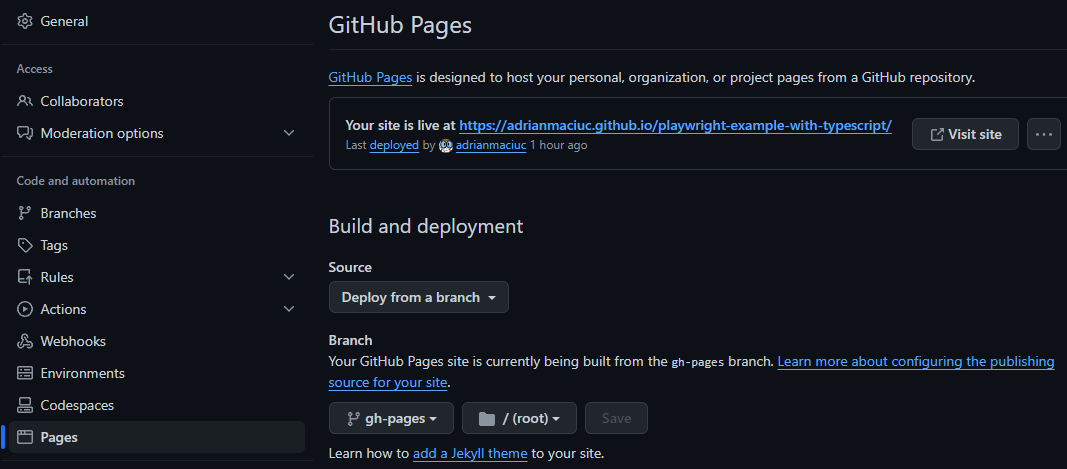

Now run the workflow. The first time the peaceiris action runs, you will not have a successful run, because it first creates a branch called gh-pages, and you then have to select that branch as the one used for GitHub Pages. Go to Settings - Pages and have it look like this

The second time you run it, it will work.

Now for the cleaning up part of these reports, things start to become a bit more complicated than I hoped.

I would really love to see something simpler for this part. Please hit me up on LinkedIn if you ever find something simpler than this but, until then, here is how I do it.

First we need to understand what the peaceiris GitHub action does. This is very important.

The action will create a separate branch called gh-pages, and it will push into this branch only the files from our report, keeping the rest of the codebase separate.

It does the push with a github_token, which is created per workflow run, and GitHub Pages then serves those files. Remember when I asked above to allow read and write permissions? Well, this is the reason.

GitHub Pages will serve any folder that contains a file called index.html. Since each of our reports is a folder with an index.html and the necessary data folders, that's all it needs. Remember we put the reports in folders with names like 20231129_000203Z, so GitHub Pages will serve each one at https://username.github.io/name-of-repo/name-of-folder-that-has-index-html/

Moving forward. I have written a script that we will use to do the cleanup, but it needs to live only in the gh-pages branch, next to our reports.

How do I git clone only one branch and see only those files?

You create a separate folder on your local machine, away from your initial git repo, and using git you fetch only that branch and those files with the command

    git clone --branch <branchname> --single-branch <remote-repo-url>

This allows you to fetch only the files from the specified branch without fetching other branches. Now, when you enter this local repo, you will notice that you do not even have main or master: you are directly on the gh-pages branch. Put here the script that I have built. You can download it from here. Once that is done, push the changes to the gh-pages branch

    git push origin gh-pages

To sum up the key points here:

- Reports and other files related to reports are kept on a separate branch

- You can have a local repo that deals only with the reports

- The script that deletes files is only in the reports branch to have everything clear.

Let's see how we manually trigger a delete workflow to clean up our old reports.

We will create a new yaml file, and it looks like this

    name: Delete old folders from github pages

    on:
      workflow_dispatch:
        inputs:
          n_days:
            description: 'Number of days to determine which folders to delete. Example: 10 will delete all folders older than 10 days'
            required: true
            default: 10
          folder_name:
            description: 'Name of the folder where you store the reports. Default is root'
            required: true
            default: '.'

    jobs:
      delete_old_folders:
        runs-on: ubuntu-latest
        permissions:
          contents: write
        steps:
          - name: Checkout code
            uses: actions/checkout@v4
            with:
              ref: gh-pages
          - name: Set up Python
            uses: actions/setup-python@v4
            with:
              python-version: '3.9'
          - name: Pray then Run the script
            run: python rm_old_folders.py --n-days ${{ github.event.inputs.n_days }} --folder-name ${{ github.event.inputs.folder_name }}
          - name: Commit all changed files back to the repository
            uses: stefanzweifel/git-auto-commit-action@v5
            with:
              branch: gh-pages
              commit_message: Delete folders older than ${{ github.event.inputs.n_days }} days

What this does: workflow_dispatch sets up the ability to trigger this workflow manually from the Actions tab in GitHub, and it takes two inputs:

- The first is the number of days used to determine which folders to delete. Example: 10 will delete all folders older than 10 days.

- The second is the name of the folder where you store the reports. The default is the root. Leave the dot there and you are fine. However, if you decide one day to store the reports in a separate folder, then give it that folder name.

Then you give it the permission contents: write, because it checks out the files from the gh-pages branch, cleans them up and pushes the changes with a commit back to the remote repo.

It installs Python to run the script, and the script cleans up the files. Then the well-known stefanzweifel/git-auto-commit-action pushes the changes back to the repo.
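The cleanup script itself is not reproduced in this post, so here is only a rough Python sketch of what a script like rm_old_folders.py could look like. It assumes the report folders are named with the timestamp format used above (for example 20231129_000203Z) and it accepts the same `--n-days` and `--folder-name` flags that the workflow passes. Everything else, including the function names, is an assumption made for illustration.

```python
import argparse
import shutil
from datetime import datetime, timedelta, timezone
from pathlib import Path
from typing import List, Optional

TIMESTAMP_FORMAT = "%Y%m%d_%H%M%SZ"  # assumed folder naming, e.g. 20231129_000203Z


def find_old_folders(root: Path, n_days: int, now: Optional[datetime] = None) -> List[Path]:
    """Return the timestamp-named sub-folders of `root` that are older than `n_days` days."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=n_days)
    old_folders = []
    for child in sorted(root.iterdir()):
        if not child.is_dir():
            continue
        try:
            created = datetime.strptime(child.name, TIMESTAMP_FORMAT).replace(tzinfo=timezone.utc)
        except ValueError:
            continue  # not a report folder (for example .git or .github), leave it alone
        if created < cutoff:
            old_folders.append(child)
    return old_folders


def main(argv: Optional[List[str]] = None) -> None:
    parser = argparse.ArgumentParser(description="Delete report folders older than N days.")
    parser.add_argument("--n-days", type=int, help="delete folders older than this many days")
    parser.add_argument("--folder-name", default=".", help="folder that contains the reports")
    args = parser.parse_args(argv)
    if args.n_days is None:
        parser.print_help()  # nothing is deleted unless --n-days is given
        return
    for folder in find_old_folders(Path(args.folder_name), args.n_days):
        print(f"Deleting {folder}")
        shutil.rmtree(folder)


if __name__ == "__main__":
    main()
```

Parsing the folder name instead of reading file modification times is a deliberate choice in this sketch: a fresh checkout in the workflow gives every file a new modification time, while the name still carries the original date.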

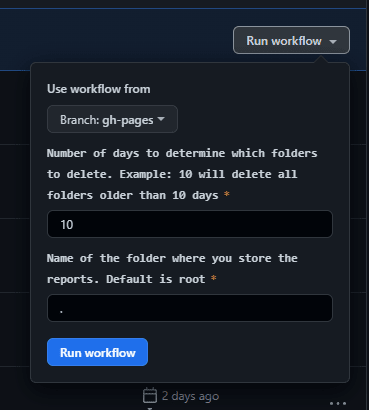

The ridiculous part of all of this is that, if you want to run this workflow manually, you must have the same yaml file in both branches: in your main or master and also in your gh-pages, stored of course in the folder .github/workflows. If you really want to know why, the reason is that the list of workflows you see in the Actions tab in GitHub is populated from the workflows on your default branch (main or master). So, even if we need the workflow only in the gh-pages branch, there is no other way to see it in the Actions tab (or at least none that I am aware of).

Now how do I run this workflow manually?

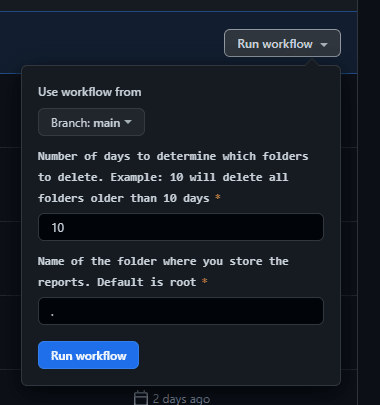

Go to GitHub and open the Actions tab. Select the Delete old folders from github pages workflow, then go to the right side and click Run workflow. This will open a dropdown.

It is very important here to change the branch to gh-pages. If you run this on main or master, it will not do anything. So it should look like this

Set the number of days you want and click the blue Run workflow button.

Here is the full yaml code for the Playwright tests:

    name: Playwright-Tests

    on:
      workflow_dispatch:
      push:
        branches: [ main ]
      pull_request:
        branches: [ main ]

    jobs:
      setup-and-run-tests:
        # setup and run the tests in parallel machines, with each machine uploading its own results to artifacts
        # artifacts are shared among jobs and machines
        timeout-minutes: 15
        runs-on: ubuntu-latest
        strategy:
          fail-fast: false
          matrix:
            shard: [1/2, 2/2]
        steps:
          - uses: actions/checkout@v3
          - uses: actions/setup-node@v3
            with:
              node-version: 18
          - name: Install dependencies
            run: npm ci
          - name: Install Playwright browsers
            run: npx playwright install --with-deps
          - name: Run Playwright tests
            run: npx playwright test --shard ${{ matrix.shard }}
          # Once playwright finishes it will create a folder called 'blob-report' where it will put all the reports
          # (this assumes the blob reporter is enabled in your playwright config)
          - name: Upload blob report to GitHub Actions Artifacts
            if: always()
            uses: actions/upload-artifact@v3
            with:
              name: merged-reports-as-blob # name of the file you want to have once its uploaded
              path: blob-report # path where the reports are, from where to take the files.
              retention-days: 14

      merge-reports-and-upload:
        # Merge reports after playwright tests, even if some shards have failed
        if: always()
        needs: [setup-and-run-tests]
        runs-on: ubuntu-latest
        outputs:
          timestamp: ${{ steps.timestampid.outputs.timestamp }}
        steps:
          # We want a unique identifier to be used when we create subdirectories for our github pages with reports
          - name: Set a timestamp
            id: timestampid
            # this will store current time with UTC in outputs.timestamp (eg of value: 20231028_233147Z)
            run: echo "timestamp=$(date --utc +%Y%m%d_%H%M%SZ)" >> "$GITHUB_OUTPUT"
          - uses: actions/checkout@v3
          - uses: actions/setup-node@v3
            with:
              node-version: 18
          - name: Install dependencies
            run: npm ci
          # we now download the reports uploaded previously to merge them and create one single html report
          - name: Download blob reports from GitHub Actions Artifacts
            uses: actions/download-artifact@v3
            with:
              name: merged-reports-as-blob # name of the file stored as artifact (as uploaded at the previous job)
              path: downloaded-merged-reports-as-blob # name of the folder where the download will be saved
          # Playwright will generate a report and store it inside a folder called '/playwright-report'
          - name: Merge the blobs into one single HTML Report
            run: npx playwright merge-reports --reporter html ./downloaded-merged-reports-as-blob
          - name: "[OPTIONAL] Upload full html report to artifacts for history"
            if: always()
            uses: actions/upload-artifact@v3
            with:
              name: playwright-report-${{ steps.timestampid.outputs.timestamp }} # name of the file you want to have once its uploaded
              path: playwright-report # path where the reports are, from where to take the files
              retention-days: 14
          - name: Push the new files to github pages
            # This will create a separate branch called gh-pages where it will store only reports. And from where it will push all future changes
            # to github pages. Having a separate branch will keep the reports and changes commits separate to your main/master
            uses: peaceiris/actions-gh-pages@v3
            with:
              github_token: ${{ secrets.GITHUB_TOKEN }}
              publish_dir: ./playwright-report # from what folder to take files. It takes only contents
              destination_dir: ${{ steps.timestampid.outputs.timestamp }} # a sub-directory where to publish to avoid overwriting other reports
          - name: Write URL in summary
            # playwright-example-with-typescript is the name of my repo, replace it with yours
            run: echo "### Test results (link available after 20 secs) - https://${{ github.repository_owner }}.github.io/playwright-example-with-typescript/${{ steps.timestampid.outputs.timestamp }}/" >> $GITHUB_STEP_SUMMARY

Full yaml code for the workflow that removes old folders:

    name: Delete old folders from github pages

    on:
      workflow_dispatch:
        inputs:
          n_days:
            description: 'Number of days to determine which folders to delete. Example: 10 will delete all folders older than 10 days'
            required: true
            default: 10
          folder_name:
            description: 'Name of the folder where you store the reports. Default is root'
            required: true
            default: '.'

    jobs:
      delete_old_folders:
        runs-on: ubuntu-latest
        permissions:
          contents: write
        steps:
          - name: Checkout code
            uses: actions/checkout@v4
            with:
              ref: gh-pages
          - name: Set up Python
            uses: actions/setup-python@v4
            with:
              python-version: '3.9'
          - name: Pray then Run the script
            run: python rm_old_folders.py --n-days ${{ github.event.inputs.n_days }} --folder-name ${{ github.event.inputs.folder_name }}
          - name: Commit all changed files back to the repository
            uses: stefanzweifel/git-auto-commit-action@v5
            with:
              branch: gh-pages
              commit_message: Delete folders older than ${{ github.event.inputs.n_days }} days

Here are some limitations that you should be aware of:

- A GitHub Pages site can hold up to 1 GB

- GitHub Pages sites have a soft bandwidth limit of 100 GB per month

- You can (not recommended) push the storage limit up to 5 GB

- Remember that we optionally uploaded our reports to artifacts. You can use that as an optional backup, but there is a retention limit of 90 days for free users and 400 days for enterprise. The storage limit is 2 GB for free users, with different values depending on your paid subscription.

- I have not covered artifacts in detail in this post, but do keep in mind that if you reach the size limit your builds will start to fail. You can easily find ways to delete old artifacts, here is a quick example.
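To keep an eye on the storage limit, one option is to measure a local checkout of the gh-pages branch. The Python sketch below is only an illustration under that assumption: the function names are hypothetical, the 1 GB constant is the figure quoted in the list above, and the .git directory is skipped because it is not part of the published site.

```python
from pathlib import Path

PAGES_SIZE_LIMIT_BYTES = 1024 ** 3  # the 1 GB figure from the list above


def folder_size_bytes(root: Path) -> int:
    """Sum the sizes of all files under `root`, ignoring anything inside a .git directory."""
    total = 0
    for path in root.rglob("*"):
        if ".git" in path.relative_to(root).parts:
            continue
        if path.is_file():
            total += path.stat().st_size
    return total


def usage_ratio(root: Path, limit: int = PAGES_SIZE_LIMIT_BYTES) -> float:
    """Return the fraction of the limit that the reports under `root` currently use."""
    return folder_size_bytes(root) / limit
```

For example, `usage_ratio(Path("."))` run inside the gh-pages checkout gives a number you can compare against a threshold of your choice before deciding how many days of reports to keep.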

Hit the clap button if you found this useful. Or even buy me a coffee if you want to motivate me even more.
